Modeling the 3D shape of animals

The SMAL animal model

12 October 2020, Silvia Zuffi

In this post we describe our work on modeling the 3D shape of animals. This is joint work with Angjoo Kanazawa and Michael J. Black.

The rapid decline of animal biodiversity is alarming. How can AI and computer vision contribute to the preservation of endangered species? Our contribution is the development of tools for monitoring and understanding animals from images, in the hope that such tools will support biological studies that aim at a better understanding of animals in their natural environment. Imagine, for example, flying a drone that automatically detects animals and estimates their 3D shape and pose. We could then ask questions like: Are these animals female or male? Adults or juveniles? Are the females pregnant? What are the animals doing?

We have developed a 3D articulated parametric shape model of animals that we named SMAL, for Skinned Multi-Animal Linear model. Does this sound familiar? Many of you probably know SMPL, the well-known Skinned Multi-Person Linear model. SMAL can be considered the “animal equivalent” of SMPL.

A 3D articulated parametric shape model is the building block of model-based methods for image understanding. Why do we use model-based methods? Because animals are complex objects, and giving detailed answers about complex objects requires an a priori model of them.
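For readers who know SMPL, such a model can be written compactly as below. This is a simplified SMPL-style sketch under our own assumptions (shape blend shapes followed by linear blend skinning, with no other corrective terms); the exact terms and notation used for SMAL are given in the 3D Menagerie paper.

$$
T(\beta) = \bar{T} + B_S\,\beta,
\qquad
M(\beta, \theta) = W\big(T(\beta),\, J(\beta),\, \theta,\, \mathcal{W}\big),
\qquad
v'_i = \sum_{k=1}^{K} w_{ik}\, G_k\big(\theta, J(\beta)\big)\, v_i
$$

Here $\bar{T}$ is a template mesh, $B_S$ a matrix of shape directions, $\beta$ the low-dimensional shape variable, $\theta$ the joint rotations, $J(\beta)$ the shape-dependent joint locations, $G_k$ the resulting rigid transform of joint $k$, $w_{ik} \in \mathcal{W}$ the skinning weight of joint $k$ on vertex $v_i$ of $T(\beta)$ (in homogeneous coordinates), and $W$ the linear blend skinning function. "Parametric" means that a whole animal mesh is produced from just $\beta$ and $\theta$.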

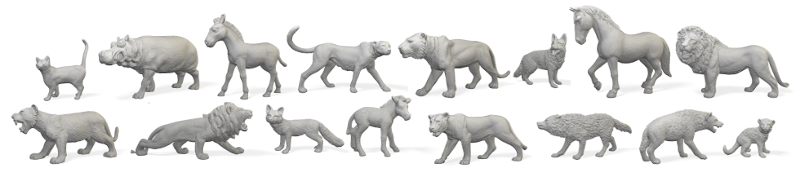

Like SMPL, which was learned from thousands of 3D scans of human bodies, SMAL is learned from data. But how could we bring animals into the lab for 3D scanning? Impossible. And which species should we model? We really wanted to model lions and tigers! We decided to scan toys: they are easy to find, they do not bite, and they are actually very realistic. Below are the 3D scans of some of the toys we used.

The toys come in different poses and very different shapes, and we needed a way to align all of them in order to learn the SMAL model. How we did this is described in the paper “3D Menagerie: Modeling the 3D Shape and Pose of Animals”. The image below shows the result of the alignment process for 6 of the 41 toys we used; colors represent corresponding surface points.

Correspondence alone is not sufficient to learn the animal shape space. We need to separate shape variation due to what we call "intrinsic shape" from shape variation due to different poses. To do this, all the toys must be in the same reference pose, and our alignment process also provides a way to achieve that. Below are the same toys in the reference pose.
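If the alignment provides, for each toy, the rigid transform of every joint and a set of skinning weights, putting a scan into the reference pose amounts to inverting linear blend skinning vertex by vertex. The NumPy sketch below illustrates this under those assumptions; the function names and the two-joint "tail" example are hypothetical and are not taken from the SMAL code.

```python
import numpy as np


def rigid_transform(angle_z, translation):
    """4x4 homogeneous transform: rotate by angle_z (radians) about z, then translate."""
    c, s = np.cos(angle_z), np.sin(angle_z)
    G = np.eye(4)
    G[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    G[:3, 3] = translation
    return G


def blended_transforms(weights, joint_transforms):
    """Per-vertex matrices A_i = sum_k w_ik G_k; weights (V, K), joint_transforms (K, 4, 4)."""
    return np.einsum("vk,kij->vij", weights, joint_transforms)


def to_homogeneous(points):
    """Append a 1 to every (x, y, z) point."""
    return np.hstack([points, np.ones((len(points), 1))])


def pose_vertices(rest_vertices, weights, joint_transforms):
    """Linear blend skinning: move (V, 3) reference-pose vertices into a new pose."""
    A = blended_transforms(weights, joint_transforms)
    return np.einsum("vij,vj->vi", A, to_homogeneous(rest_vertices))[:, :3]


def unpose_vertices(posed_vertices, weights, joint_transforms):
    """Pose normalization: undo each vertex's blended transform (assumed invertible)."""
    A_inv = np.linalg.inv(blended_transforms(weights, joint_transforms))
    return np.einsum("vij,vj->vi", A_inv, to_homogeneous(posed_vertices))[:, :3]


# Hypothetical toy "tail": joint 0 stays fixed, joint 1 bends by 90 degrees about z.
rest = np.array([[-1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
weights = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]])
joints = np.stack([np.eye(4), rigid_transform(np.pi / 2, [0.0, 0.0, 0.0])])

posed = pose_vertices(rest, weights, joints)
recovered = unpose_vertices(posed, weights, joints)
print(np.allclose(recovered, rest))  # True
```

The middle vertex is influenced half by each joint, which is what makes skinning smooth at joints; unposing still works because its blended matrix remains invertible.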

From the aligned, pose-normalized data it is straightforward to use statistical methods to build a mathematical representation of intrinsic shape variation, in which a shape is specified by a low-dimensional shape variable. We learned this shape space from all the toys together, obtaining a single shape space that can represent different species. The video below shows the effect of interpolating the shape variable between different animal families.
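One standard way to learn such a shape space is principal component analysis (PCA) on the flattened, pose-normalized vertices: a mean shape plus a few principal directions, with the shape variable given by the coefficients along those directions. Interpolating between two animals then amounts to blending their coefficient vectors. The NumPy sketch below runs on random stand-in data, not on the toy scans; the function names are hypothetical, and the modeling choices in the SMAL paper may go beyond plain PCA.

```python
import numpy as np


def learn_shape_space(aligned_shapes, num_components):
    """PCA on pose-normalized meshes in correspondence, shape (N, V, 3)."""
    data = aligned_shapes.reshape(len(aligned_shapes), -1)  # (N, 3V)
    mean = data.mean(axis=0)
    # Rows of vt are orthonormal principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:num_components]  # basis: (C, 3V)


def encode(shape, mean, basis):
    """Shape coefficients (the low-dimensional shape variable) of a (V, 3) mesh."""
    return basis @ (shape.reshape(-1) - mean)


def decode(beta, mean, basis):
    """Mesh (V, 3) for shape coefficients beta."""
    return (mean + beta @ basis).reshape(-1, 3)


def interpolate(beta_a, beta_b, t):
    """Linear blend of two shape variables; t=0 gives beta_a, t=1 gives beta_b."""
    return (1.0 - t) * beta_a + t * beta_b


# Random stand-in data: 5 "toys" with 10 vertices each (NOT real scan data).
rng = np.random.default_rng(0)
toys = rng.normal(size=(5, 10, 3))
mean, basis = learn_shape_space(toys, num_components=4)

beta_a = encode(toys[0], mean, basis)
beta_b = encode(toys[1], mean, basis)
halfway = decode(interpolate(beta_a, beta_b, 0.5), mean, basis)
```

With five shapes, the centered data has rank at most four, so four components reconstruct every training shape exactly and `halfway` is simply the average of the two meshes. A real shape model keeps far fewer components than it has vertices, and sampling or interpolating coefficients then produces new plausible shapes.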

Once we had created SMAL, we fitted the model to images and videos of real animals, given annotations of silhouettes and 2D keypoint locations. Here are some examples of SMAL fitted to Eadweard Muybridge's famous images:
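Fitting relies on an objective that compares the projected model with the annotations. The sketch below covers only the 2D keypoint part, assuming a simple weak-perspective camera (a scale plus a 2D translation) and a visibility mask for keypoints that are not annotated. It estimates the camera in closed form by linear least squares, a common way to initialize such a fit. A full fit would also optimize pose and shape and use the silhouette annotations; those parts are omitted, and all names here are hypothetical.

```python
import numpy as np


def project_weak_perspective(points_3d, scale, trans):
    """Weak-perspective projection: drop depth, then scale and translate in the image."""
    return scale * points_3d[:, :2] + trans


def keypoint_energy(model_kp_3d, annotated_2d, visible, scale, trans):
    """Sum of squared reprojection errors over visible keypoints only."""
    residual = project_weak_perspective(model_kp_3d, scale, trans) - annotated_2d
    return float(np.sum(visible[:, None] * residual**2))


def fit_camera(model_kp_3d, annotated_2d, visible):
    """Closed-form least-squares scale and translation from visible keypoints."""
    X = model_kp_3d[visible, :2]
    b = annotated_2d[visible].reshape(-1)  # [u0, v0, u1, v1, ...]
    A = np.zeros((2 * len(X), 3))  # unknowns: [scale, tx, ty]
    A[0::2, 0] = X[:, 0]  # u = scale * x + tx
    A[0::2, 1] = 1.0
    A[1::2, 0] = X[:, 1]  # v = scale * y + ty
    A[1::2, 2] = 1.0
    (scale, tx, ty), *_ = np.linalg.lstsq(A, b, rcond=None)
    return scale, np.array([tx, ty])


# Hypothetical model keypoints and synthetic annotations from a known camera.
rng = np.random.default_rng(1)
kp_3d = rng.normal(size=(8, 3))
annotated = project_weak_perspective(kp_3d, 2.0, np.array([3.0, -1.0]))
visible = np.ones(8, dtype=bool)
visible[5] = False
annotated[5] = [100.0, 100.0]  # bogus entry for an unannotated keypoint; masked out

scale, trans = fit_camera(kp_3d, annotated, visible)
energy = keypoint_energy(kp_3d, annotated, visible, scale, trans)
```

Because the camera model is linear in its unknowns, no iterative optimizer is needed for this step; pose and shape, which enter nonlinearly, are where an iterative fit is required.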

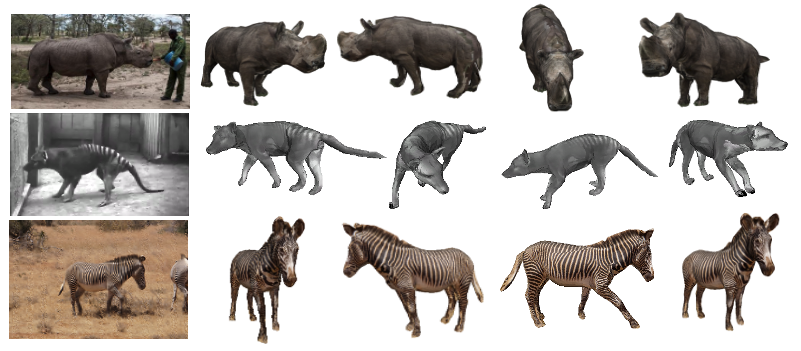

Some animals cannot be found as toys, and they cannot be scanned either, because they no longer exist. The thylacine, also known as the Tasmanian tiger, is extinct; the last live animal was captured in 1933. We can use SMAL to provide an approximate shape for the Tasmanian tiger and then use silhouettes extracted from a set of images to “refine” the 3D model. We took frames from video of the last Tasmanian tiger in captivity, recorded at the Hobart Zoo, fitted the SMAL model to them, and applied shape refinement to create a 3D model of the animal. This approach is called SMALR, for "SMAL with refinement", and is described in the paper “Lions and Tigers and Bears: Capturing Non-Rigid, 3D, Articulated Shape from Images”.
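One way to picture the refinement is as displacements added to the fitted model vertices, letting the surface leave the SMAL shape space. A common way to keep such free-form displacements well behaved is a smoothness penalty based on the mesh graph Laplacian. The sketch below assumes this kind of regularizer and, to stay self-contained, replaces the silhouette term with hypothetical target offsets at a few vertices; it solves the resulting linear least-squares problem and is not the SMALR objective itself.

```python
import numpy as np


def graph_laplacian(num_vertices, edges):
    """Uniform graph Laplacian L = D - A of an undirected mesh graph."""
    L = np.zeros((num_vertices, num_vertices))
    for i, j in edges:
        L[i, j] -= 1.0
        L[j, i] -= 1.0
        L[i, i] += 1.0
        L[j, j] += 1.0
    return L


def refine_displacements(num_vertices, edges, constrained, targets, smoothness):
    """Minimize sum_c ||d_c - target_c||^2 + smoothness * ||L d||^2 per coordinate."""
    L = graph_laplacian(num_vertices, edges)
    S = np.zeros((len(constrained), num_vertices))  # selects the constrained vertices
    S[np.arange(len(constrained)), constrained] = 1.0
    A = np.vstack([S, np.sqrt(smoothness) * L])
    b = np.vstack([targets, np.zeros((num_vertices, 3))])
    displacements, *_ = np.linalg.lstsq(A, b, rcond=None)
    return displacements  # (V, 3), to be added to the fitted model vertices


# Hypothetical 5-vertex chain; only the two end vertices have target offsets.
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
targets = np.array([[0.1, 0.0, 0.0], [0.1, 0.0, 0.0]])
d = refine_displacements(5, edges, constrained=[0, 4], targets=targets, smoothness=1.0)
```

A constant offset has zero Laplacian, so here the smoothness term spreads the end-point targets over the whole chain and every vertex moves by the same 0.1 in x. With conflicting targets, a larger `smoothness` trades data fit for a smoother displacement field.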

A few years ago, in Kenya, the last male northern white rhino died. His name was Sudan. We took pictures of Sudan and, following the same approach, created a 3D model of him. Kenya is also home to the Grevy’s zebra, an endangered species of zebra with only a few thousand individuals left.

The image below shows images of Sudan, the Tasmanian tiger, and the Grevy's zebra, together with the recovered 3D models.

We applied the SMALR method to images of several zebras, obtaining a set of avatars that we can pose to create a synthetic dataset. With this dataset we trained a neural network to estimate the 3D pose and shape of Grevy’s zebras from images. This work is described in the paper “Three-D Safari: Learning to Estimate Zebra Pose, Shape, and Texture from Images In-the-Wild”. In the video below we applied the method to the frames of a video that we captured at the Mpala Research Center in Kenya.

The code for creating 3D animal models from images is available here: smalr_online

The Perceiving Systems Department is a leading Computer Vision group in Germany.

We are part of the Max Planck Institute for Intelligent Systems in Tübingen — the heart of Cyber Valley.

We use Machine Learning to train computers to recover human behavior in fine detail, including face and hand movement. We also recover the 3D structure of the world, its motion, and the objects in it to understand how humans interact with 3D scenes.

By capturing human motion and modeling behavior, we contribute realistic avatars to Computer Graphics.

To have an impact beyond academia we develop applications in medicine and psychology, spin off companies, and license technology. We make most of our code and data available to the research community.